We live in a time of duality: part of our life unfolds, as it always has, in what we consider the physical world, while another part unfolds online, and many people now devote more time to it than to the physical one. Many of our activities, memories and ideas are stored in an ethereal space called the Cloud. But what is the Cloud? Where is it? And what are its implications on a global level?

The Cloud is a model of computer data storage in which digital information is kept in logical pools, that is, collections of resources that are ready for use. The physical storage spans multiple servers, and the physical environment is typically owned and managed by a hosting company. (A server is a piece of computer hardware or software that provides functionality for other programs or devices.)

Any individual or company can buy or lease storage capacity from the hosting company, either directly or indirectly. The current market size of $5.5 billion is estimated to grow to $20 billion within the next five years, serving a wide portfolio of clients that includes the healthcare, retail, manufacturing, IT and financial sectors.

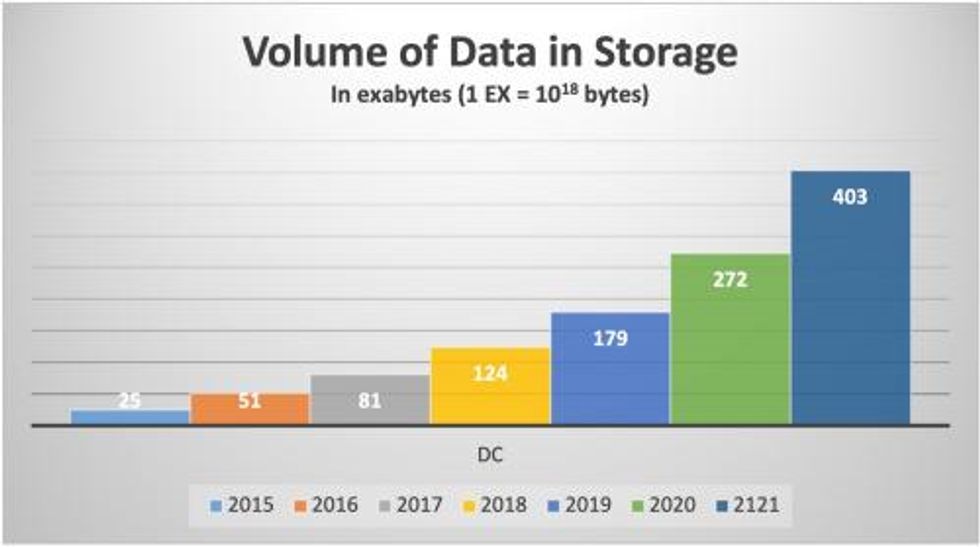

Figure 1: The increasing volume of data being stored in data centers

The need to reduce IT system downtime, combined with a lack of scalable IT infrastructure, has driven major growth in the data center (DC) services market. As in some primary industries, the cost of data center downtime is high, and so is the resulting loss of business. Data center failures are expensive and potentially catastrophic because of business disruption and lost revenue. A prominent example occurred in June 2018, when a switch failure at a Visa data center resulted in 5.2 million lost transactions, with the corresponding loss of revenue.

One of the main objectives of the hosting company is to keep the data available and accessible 24/7 and to keep the facility running. Like any other industry, a DC depends on a supply of electrical energy to sustain its business. The main power source is the public grid, but each center needs backup equipment to generate electricity if the main source is lost. Because of its importance to the business, this backup equipment must be continuously maintained.

As in any industrial application, fluids are an intrinsic part of the equipment. While data center operators aim to restore operation as quickly as possible after an outage, fluid condition monitoring helps ensure that backup systems are available when needed in the first place. Analyzing industrial fluids to confirm that they are in adequate working condition allows the production process to continue normally. The most common fluids in this scenario are diesel, antifreeze and lube oil for the generators; turbine oil if the energy is produced in-house; fuel held in storage for later use; refrigeration oil and refrigerants; cooling water and coolants; and transformer dielectric fluids.

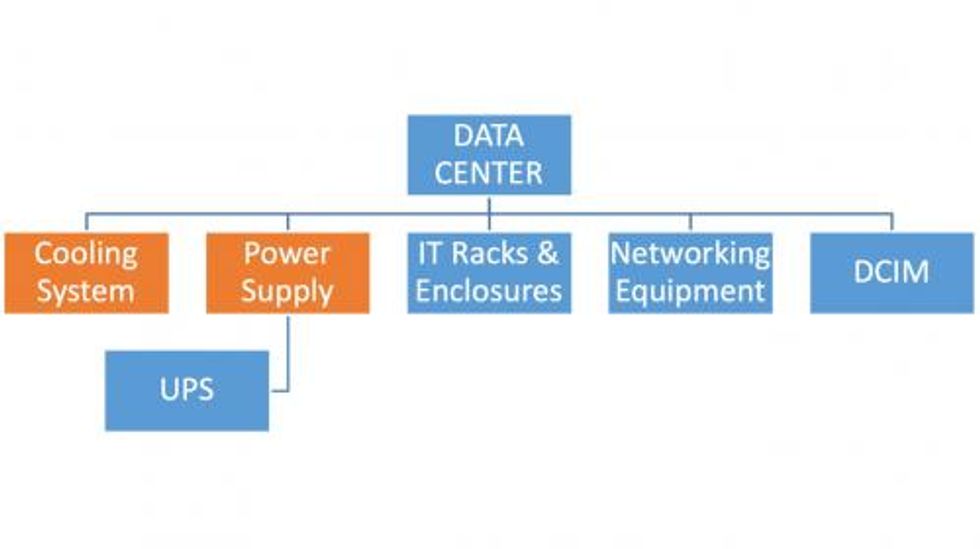

DATA CENTER ARCHITECTURE

Intelligent systems now occupy a highly relevant place in business administration. Many DCs use intelligent systems that help reduce energy consumption and improve power usage effectiveness (PUE). This type of software allows operators to monitor, measure and control the most important data center parameters, covering not only IT equipment but also critical supporting infrastructure such as cooling and power systems. It helps data center supervisors achieve maximum energy efficiency and avoid the equipment failures that lead to downtime.
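PUE is the ratio of the total energy a facility draws to the energy delivered to its IT equipment, so a value of 1.0 would mean zero overhead for cooling, power conversion and lighting. The minimal sketch below computes it; the function name and the monthly figures are hypothetical, chosen only to show the arithmetic.

```python
def power_usage_effectiveness(total_facility_kwh: float, it_equipment_kwh: float) -> float:
    """PUE = total facility energy / IT equipment energy (ideal value is 1.0)."""
    if it_equipment_kwh <= 0:
        raise ValueError("IT equipment energy must be positive")
    if total_facility_kwh < it_equipment_kwh:
        raise ValueError("total facility energy cannot be less than IT energy")
    return total_facility_kwh / it_equipment_kwh


# Hypothetical month: 1,500 MWh drawn by the whole facility, 1,000 MWh by the IT load
pue = power_usage_effectiveness(1_500_000, 1_000_000)
print(f"PUE = {pue:.2f}")  # PUE = 1.50
```

Here every kilowatt-hour delivered to servers costs another half kilowatt-hour in overhead, much of it in the cooling and power systems whose fluids this article is concerned with.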

Fluid condition monitoring encompasses a complete range of tests to assess the condition of the oil, fuel and coolants in backup systems. Condition monitoring helps ensure that mission-critical systems are continuously available. Although quality control is a valid reason to analyze fluids in a DC, one of the most important goals is to identify the fluid's failure mode and anticipate it.

A recent study by the Uptime Institute calculated the cost of downtime: approximately 33 percent of all incidents cost enterprises over $250,000, and 15 percent of downtime episodes cost more than $1 million. Ensuring the physical safety and proper operation of all equipment, fluids and other critical components has therefore become the top priority for those responsible for infrastructure and operations.

From the maintenance reliability perspective, the well-known P-F curve applies not only to critical machines and components but also to industrial fluids. Like any other industrial element, oil, diesel or coolants can fail, so determining the point P at which their degradation first becomes detectable, well before the functional failure point F, is highly critical.
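The practical use of the P-F interval is deciding how often to sample. A common rule of thumb in reliability-centered maintenance, assumed here rather than taken from this article, is to inspect at least twice within the P-F interval. The helper below and the 90-day interval are hypothetical; the real P-F interval for a given fluid has to be estimated from its own trend data.

```python
def sampling_interval_days(pf_interval_days: float, divisor: float = 2.0) -> float:
    """Rough sampling interval derived from an estimated P-F interval.

    divisor=2 encodes the assumed rule of thumb of sampling at least twice
    within the P-F interval; a larger divisor gives more margin for
    highly critical assets.
    """
    if pf_interval_days <= 0 or divisor < 1:
        raise ValueError("P-F interval must be positive and divisor >= 1")
    return pf_interval_days / divisor


# Hypothetical: oil degradation becomes detectable ~90 days before functional failure
print(sampling_interval_days(90))     # 45.0
print(sampling_interval_days(90, 3))  # 30.0
```

Sampling at half the P-F interval or less means at least one sample should land between P and F, leaving time to act before the fluid fails.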

Figure 2: Data center architecture

Fluid analysis of this critical equipment is a cost-effective way to understand the condition of the DC’s equipment and of the fluids in it. Including fluid condition monitoring in the preventive maintenance strategy for the DC’s power and cooling systems helps protect against downtime risk. For example, testing the diesel fuel stored for power generators is critical to ensuring that the facility can keep operating if outside power is lost. To determine the serviceability of fuel stored on site, testing should comply with the National Fire Protection Association’s NFPA 110 and ASTM International’s ASTM D975.
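As a sketch of how stored-fuel results might be screened, the snippet below compares a few lab values against specification limits. The limits are the values commonly quoted for Grade No. 2-D diesel in ASTM D975 and are included only for illustration; the `FuelSample` class, its field names and the `out_of_spec` helper are hypothetical, and acceptance decisions should rely on the current editions of ASTM D975 and NFPA 110.

```python
from dataclasses import dataclass


@dataclass
class FuelSample:
    flash_point_c: float
    viscosity_40c_mm2_s: float
    water_sediment_vol_pct: float


# Illustrative limits for Grade No. 2-D diesel (min, max); None means no bound.
# Verify against the current edition of ASTM D975 before relying on them.
LIMITS = {
    "flash_point_c": (52.0, None),
    "viscosity_40c_mm2_s": (1.9, 4.1),
    "water_sediment_vol_pct": (None, 0.05),
}


def out_of_spec(sample: FuelSample) -> list[str]:
    """Return a description of every property that falls outside LIMITS."""
    failures = []
    for field, (low, high) in LIMITS.items():
        value = getattr(sample, field)
        if low is not None and value < low:
            failures.append(f"{field}={value} below {low}")
        if high is not None and value > high:
            failures.append(f"{field}={value} above {high}")
    return failures


# Hypothetical lab result for a stored-fuel sample
sample = FuelSample(flash_point_c=60.0, viscosity_40c_mm2_s=2.8, water_sediment_vol_pct=0.08)
print(out_of_spec(sample))  # ['water_sediment_vol_pct=0.08 above 0.05']
```

Keeping the limits in a table rather than hard-coding them in the checks makes it easy to swap in the site's own acceptance criteria.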

ANALYSIS OF INDUSTRIAL FLUIDS NOW MORE IMPORTANT THAN EVER

It is nothing new that the analysis of oils, greases, coolants and other fluids is highly valued within the reliability chain of any industrial plant. However, in some critical sectors, such as data storage, industrial fluid analysis plays a vital role that goes beyond quality control or warranty issues.

The explosive growth of the DC sector over the last two years has had a very large impact on maintenance practices, as facilities have grown from small spaces into centers that can occupy thousands of square feet. Like their predecessors, they require only two things to operate: a source of electrical power and a controlled temperature.

Figure 3: The top reasons why industrial managers analyze fluids

The reasons for performing fluid analysis differ between industrial managers and DC facility managers. Industrial managers' reasons most likely fall into one of these groups: quality, warranty, the supplier offers it for free (even though this is not entirely true), and maintenance. When a DC forum was asked for its top reason, the answer was simply that fluid analysis is part of the backbone of daily operations. DC facility managers treat lubricants as assets, not as consumables. What makes the DC sector different from the industrial sector, such that industrial fluids are treated as assets integral to the production chain?

WHERE TO START THE FLUID ANALYSIS

Figure 4 illustrates the four main areas where fluid analysis should start. These are the areas where fluid analysis can do the most to minimize downtime and the associated losses. Table 1 outlines the different types of analyses that can be conducted.

Figure 4: Areas to start fluid analysis

Table 1: Fluid analysis by application

SHORT CASE STUDY REVIEW

The power generator group of a data center used a 32 cSt oil, typical for this type of equipment. Without warning, the temperature of the in-service oil began to rise to 5 degrees Celsius above normal, with the consequent risk of an automatic shutdown.

All alerts and internal protocols were activated to find the origin of the problem and correct it before an undesired scenario occurred. After three days of searching for the source, with the temperature reaching the limit and the oil turning darker, the original equipment manufacturer recommended sending an oil sample to the laboratory. After a basic analysis, and in less than four hours, the laboratory found the origin of the problem: cross-contamination with another fluid had depleted the antifoam additive.
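Viscosity at 40 °C is one of the basic tests in a routine oil analysis. Assuming the 32 cSt oil in this case is an ISO VG 32 grade, ISO 3448 allows ±10 % around the 32 cSt midpoint. The sketch below computes that band and classifies a measurement; the ±20 % caution boundary is an assumed alarm scheme rather than a standard, and the function names are hypothetical.

```python
def iso_vg_band(grade: float, tolerance: float = 0.10) -> tuple[float, float]:
    """ISO 3448 viscosity band at 40 °C: the grade midpoint +/-10 %."""
    return grade * (1 - tolerance), grade * (1 + tolerance)


def viscosity_status(measured_cst: float, grade: float) -> str:
    """Classify an in-service viscosity reading relative to the grade midpoint.

    Assumed alarm scheme: up to 10 % deviation is normal, up to 20 % is a
    caution, anything beyond is critical. Sites often set their own limits.
    """
    deviation = abs(measured_cst - grade) / grade
    if deviation <= 0.10:
        return "normal"
    if deviation <= 0.20:
        return "caution"
    return "critical"


low, high = iso_vg_band(32)
print(f"ISO VG 32 band: {low:.1f}-{high:.1f} cSt")  # ISO VG 32 band: 28.8-35.2 cSt
print(viscosity_status(36.5, 32))                  # caution
```

A viscosity check alone would not have revealed the depleted antifoam additive, which is why the laboratory's broader analysis mattered in this case.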

Antifoam additives can be depleted by hot spots, water, contamination or other unwanted cross-contamination. Without this additive, air entrained in the oil may stay in it longer than it should. If the air bubbles pass through a high-pressure zone (e.g., bearing journals or the discharge side of the pump), they collapse violently. This alone can cause serious reliability issues, first for the oil and later for the equipment. Because the bubbles are compressed so quickly in the pressurized zone, the compression is essentially adiabatic: the trapped air heats up sharply, and the air-oil mixture can ignite, producing high temperatures in the space around the collapsing bubble.
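The heating described above follows from the ideal-gas relation for reversible adiabatic compression, T2 = T1 (p2/p1)^((γ − 1)/γ), with γ ≈ 1.4 for air. The sketch below estimates the bubble temperature for a hypothetical jump from 1 bar to 200 bar at a 60 °C oil temperature; real bubbles exchange heat with the oil and compress less ideally, so treat the result as an upper-bound illustration, not a measured value.

```python
def adiabatic_temperature_k(t1_k: float, p1: float, p2: float, gamma: float = 1.4) -> float:
    """Gas temperature after reversible adiabatic compression from p1 to p2.

    T2 = T1 * (p2 / p1) ** ((gamma - 1) / gamma); gamma = 1.4 for air.
    p1 and p2 only need to share the same units.
    """
    if t1_k <= 0 or p1 <= 0 or p2 <= 0:
        raise ValueError("temperature and pressures must be positive")
    return t1_k * (p2 / p1) ** ((gamma - 1) / gamma)


# Hypothetical: an air bubble at 60 °C and 1 bar swept into a 200 bar zone
t2 = adiabatic_temperature_k(333.15, 1.0, 200.0)
print(f"{t2:.0f} K ({t2 - 273.15:.0f} °C)")
```

For these inputs the estimate comes out at roughly 1,500 K, hot enough to explain why the oil vapor inside a collapsing bubble can ignite.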

The Fourier-transform infrared spectroscopy (FTIR) graph in Figure 5 shows the difference between the new oil and the one in service, where the loss of the antifoam additive and the generation of by-products from the chemical degradation of the oil can be seen.

Figure 5: FTIR graph showing difference between new and in service oil and the chemical degradation of the oil caused by antifoam additive loss
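One common way to read an FTIR comparison like the one in Figure 5 is to subtract the new-oil spectrum from the in-service spectrum and integrate the difference over a band of interest. The sketch below does this with synthetic spectra, not laboratory data; the 1670 to 1800 cm⁻¹ window for oxidation (carbonyl) products is an assumption to adjust to the laboratory's own method, and the function names are hypothetical.

```python
def difference_spectrum(new_oil, in_service):
    """Point-by-point absorbance difference (in-service minus new oil).

    Assumes both spectra were recorded on the same wavenumber grid.
    """
    if len(new_oil) != len(in_service):
        raise ValueError("spectra must share the same wavenumber grid")
    return [b - a for a, b in zip(new_oil, in_service)]


def band_area(wavenumbers, absorbance, low, high):
    """Trapezoidal area of the absorbance between low and high (cm^-1)."""
    pts = sorted((w, a) for w, a in zip(wavenumbers, absorbance) if low <= w <= high)
    return sum((w1 - w0) * (a0 + a1) / 2 for (w0, a0), (w1, a1) in zip(pts, pts[1:]))


# Synthetic spectra on a 2 cm^-1 grid (NOT real lab data): the "in-service" oil
# has extra absorbance between 1700 and 1740 cm^-1, mimicking carbonyl growth.
grid = list(range(1650, 1822, 2))
new_oil = [0.05] * len(grid)
in_service = [0.05 + (0.10 if 1700 <= w <= 1740 else 0.0) for w in grid]

diff = difference_spectrum(new_oil, in_service)
# Integration window assumed for the oxidation (carbonyl) band
oxidation_area = band_area(grid, diff, 1670, 1800)
print(f"oxidation band area: {oxidation_area:.2f}")
```

Trending this area from sample to sample, rather than reading a single spectrum, is what shows whether degradation is accelerating.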

This process is known as microdieseling, and it eventually leads to oxidative degradation of the lubricant. At the same time, the oil operating temperature rises, which increases the rate of oxidation and creates a vicious cycle of degradation that continues until the oil fails.
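The link between temperature and oxidation is often estimated with an Arrhenius-based rule of thumb: the oxidation rate roughly doubles for every 10 °C rise. Applying that assumed rule to the 5 °C excursion in this case study gives a feel for the cost of running hot; the function name is hypothetical.

```python
def oxidation_rate_factor(delta_t_c: float, doubling_step_c: float = 10.0) -> float:
    """Relative oxidation rate after a temperature change.

    Uses the rule of thumb that the rate doubles for every ~10 °C rise
    (an Arrhenius approximation, not an exact law for any specific oil).
    """
    return 2 ** (delta_t_c / doubling_step_c)


factor = oxidation_rate_factor(5.0)  # the 5 °C excursion from the case study
print(f"rate x{factor:.2f}, oil life ~{100 / factor:.0f} % of baseline")
# rate x1.41, oil life ~71 % of baseline
```

The actual sensitivity depends on the base oil and additive package, so the factor is a rough indication, not a prediction.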

Adequate analysis performed at a sufficient frequency allows the facility manager to keep industrial fluids within their established condition limits, minimizing unnecessary losses.

Fluid analysis in a DC must match the criticality of these facilities. In this sense, one of the most important missions of fluid condition monitoring is to ensure continuity, monitoring and quality throughout the entire service life of the fluids.